Introduction to 3D Model Creation for Avatars

A compelling conversational AI avatar needs more than a voice and a chatbot backend: it needs a visual representation that looks realistic and can respond dynamically during a conversation. This is where 3D model creation comes in. The 3D avatar acts as the face of your AI, giving it a visible presence that can make interactions more engaging and help users feel a stronger connection than a text-only or audio-only system typically allows.

Unlike 3D models built for pre-rendered animation or offline visualization, avatars for real-time conversation have specific requirements. They must render efficiently in a web browser, which calls for lightweight geometry, compact textures, and a streamlined rig. Crucially, they also need facial animation mechanisms, covering both lip-syncing and emotional expressions, that can be driven at runtime by external data, for example timing information derived from the speech audio.
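One common way to drive facial animation from external data is with morph targets (also called blendshapes): the rig stores a neutral mesh plus a set of deformed copies, and each animation frame supplies one weight per shape, so each vertex becomes v = b + Σ wᵢ (tᵢ − b). The minimal Python sketch below models that blending step only. It assumes each target stores absolute vertex positions in the same vertex order as the neutral mesh; the function name `blend_morph_targets` and the shape names `jawOpen` and `smile` are illustrative, not part of any particular engine's API. In a browser the renderer usually performs this blend on the GPU, so treat this as a model of the arithmetic rather than production code.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def blend_morph_targets(
    base: List[Vec3],
    targets: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Deform `base` by weighted morph-target deltas (hypothetical helper).

    Computes base + sum_i w_i * (target_i - base) for every vertex.
    Targets are assumed to hold absolute positions with the same vertex
    count and order as `base`.
    """
    result = [list(v) for v in base]
    for name, weight in weights.items():
        if weight == 0.0:
            continue  # an inactive shape contributes nothing
        target = targets[name]  # KeyError signals an unknown shape name
        if len(target) != len(base):
            raise ValueError(f"target {name!r} has a different vertex count")
        # This sketch clamps weights to [0, 1]; some pipelines allow other ranges.
        w = max(0.0, min(1.0, weight))
        for i, (b, t) in enumerate(zip(base, target)):
            for axis in range(3):
                result[i][axis] += w * (t[axis] - b[axis])
    return [tuple(v) for v in result]
```

A lip-sync driver then only has to emit a weight dictionary per frame (for example, raising a jaw-open shape on open vowels), while the mesh data itself never changes.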

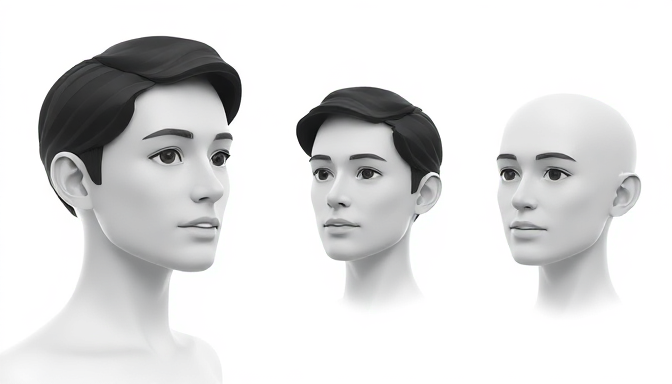

Our approach, following the architecture laid out in this book, begins by processing user video to extract key biometric data, specifically facial landmarks. This data serves more than identification: it captures the unique contours and expressions of the user's face. The challenge is to transform this raw facial data into a fully rigged, textured 3D model that faithfully reflects the user's likeness.
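Before raw landmarks can be mapped onto a template mesh, they usually need to be made independent of where the face sat in the frame and how close it was to the camera. A typical first step, and the translation-and-scale part of Procrustes alignment, is to center the points on their centroid and rescale them to unit root-mean-square distance. The Python sketch below shows only that step. It assumes landmarks arrive as a list of `(x, y, z)` tuples from some face-landmark detector; the function name `normalize_landmarks` is hypothetical, and the pipeline described in this book may normalize differently.

```python
import math
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def normalize_landmarks(landmarks: List[Point3]) -> List[Point3]:
    """Center landmarks on their centroid and scale to unit RMS distance.

    Removes translation and uniform scale; rotation is left untouched.
    """
    if len(landmarks) < 2:
        raise ValueError("need at least two landmarks")
    n = len(landmarks)
    # Centroid of the point set.
    cx = sum(p[0] for p in landmarks) / n
    cy = sum(p[1] for p in landmarks) / n
    cz = sum(p[2] for p in landmarks) / n
    centered = [(x - cx, y - cy, z - cz) for x, y, z in landmarks]
    # Root-mean-square distance of the centered points from the origin.
    rms = math.sqrt(sum(x * x + y * y + z * z for x, y, z in centered) / n)
    if rms == 0.0:
        raise ValueError("landmarks are degenerate (all points identical)")
    return [(x / rms, y / rms, z / rms) for x, y, z in centered]
```

Because translation and scale are removed, two captures of the same face taken at different distances from the camera yield nearly the same normalized points. Head rotation is not compensated here; a full Procrustes alignment against a reference shape would also solve for it.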